Creators Face New ‘Full Disclosure’ Rules As YouTube Cracks Down On Undeclared AI Alterations

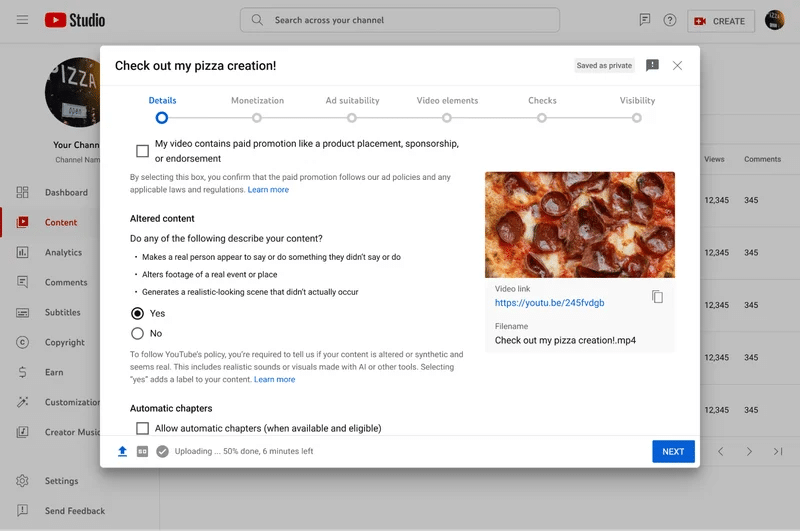

To address growing concerns about the authenticity of online content, YouTube has introduced a new tool in Creator Studio. It requires creators to disclose when they use generative AI or other synthetic media to make realistic content that viewers could mistake for authentic material. The initiative aims to strengthen transparency and build trust between creators and their audiences.

“That’s why today, we’re introducing a new tool in Creator Studio requiring creators to disclose to viewers when realistic content – content a viewer could easily mistake for a real person, place, scene, or event – is made with altered or synthetic media, including generative AI,” YouTube said in a blog post.

Creators must flag content depicting realistic people, events, places, or scenes that a viewer could mistake for real. Examples include digitally altering a person's face, synthetically generating a voice, modifying real footage of events or locations, and generating photorealistic fictional scenes.

However, YouTube does not require disclosure when generative AI assists with productivity tasks such as scripting, brainstorming ideas, or generating captions. Creators also do not need to flag clearly unrealistic content, such as animation, or minor edits such as color adjustments, filters, or visual enhancements.

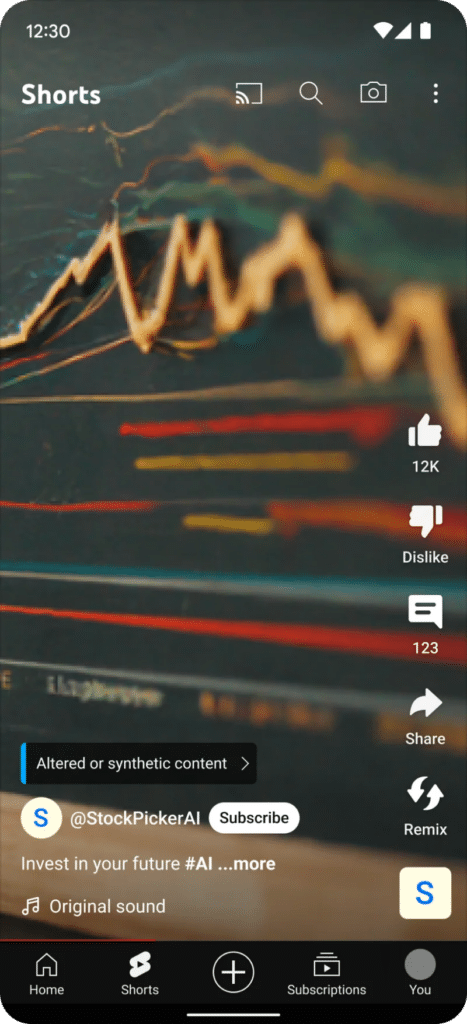

For most videos in which creators disclose altered or synthetic content, a label will appear in the expanded description. Videos on sensitive topics such as health, news, elections, or finance will also carry a more prominent label.

According to the company, the new features will roll out across YouTube’s platforms over the coming weeks, beginning with the mobile app. YouTube says it will give creators time to adjust, but the post notes that it may eventually take enforcement action against creators who do not comply, including adding labels itself when undisclosed alterations could mislead viewers.

The changes build on the responsible AI principles the platform announced in November, which covered disclosure requirements, an updated process for requesting the removal of content that synthesizes real individuals, and building responsibility into all of its AI products and features.

YouTube is also working with the Coalition for Content Provenance and Authenticity (C2PA) to increase transparency around digital content across the industry. In parallel, the company continues to develop a process that lets people request the removal of AI-generated content that simulates an identifiable individual.

YouTube stresses that creators remain central to this effort. “Creators are the heart of YouTube, and they’ll continue to play an incredibly important role in helping their audience understand, embrace, and adapt to the world of generative AI,” the company wrote, framing the transparency measures as an evolving process that will help it better understand how AI can empower human creativity.